Latest News

Enhancing Graph Contrastive Learning with Reliable and Informative Augmentation for Recommendation

Bridging Textual-Collaborative Gap through Semantic Codes for Sequential Recommendation

KG-Agent: An Efficient Autonomous Agent Framework for Complex Reasoning over Knowledge Graph

LongReD: Mitigating Short-Text Degradation of Long-Context Large Language Models via Restoration Distillation

Towards Effective and Efficient Continual Pre-training of Large Language Models

YuLan-Mini: Pushing the Limits of Open Data-efficient Language Model

Think More, Hallucinate Less: Mitigating Hallucinations via Dual Process of Fast and Slow Thinking

Unlocking General Long Chain-of-Thought Reasoning Capabilities of Large Language Models via Representation Engineering

Unveiling Knowledge Utilization Mechanisms in LLM-based Retrieval-Augmented Generation

Search-Based Interaction For Conversation Recommendation via Generative Reward Model Based Simulated User

LLM-based Search Assistant with Holistically Guided MCTS for Intricate Information Seeking

Generative Recommender with End-to-End Learnable Item Tokenization

UFIN: Universal Feature Interaction Network for Multi-Domain Click-Through Rate Prediction

Mix-CPT: A Domain Adaptation Framework via Decoupling Knowledge Learning and Format Alignment

NPTI: Neuron Based Personality Trait Induction in Large Language Models

Investigating the Pre-Training Dynamics of In-Context Learning: Task Recognition vs. Task Learning

DAWN-ICL: Strategic Planning of Problem-solving Trajectories for Zero-Shot In-Context Learning

RAG-Star: Enhancing Deliberative Reasoning with Retrieval Augmented Verification and Refinement

Frequency-Augmented Mixture-of-Heterogeneous-Experts Framework for Sequential Recommendation

Self-Calibrated Listwise Reranking with Large Language Models

Unleashing the Potential of Large Language Models as Prompt Optimizers: An Analogical Analysis with Gradient-based Model Optimizers

Publication: Ruiyang Ren (任瑞阳)'s paper accepted to LREC-COLING 2025

Investigating the Factual Knowledge Boundary of Large Language Models with Retrieval Augmentation

Publication: Yifan Du (都一凡)'s paper accepted to LREC-COLING 2025

What Makes for Good Visual Instructions? Synthesizing Complex Visual Reasoning Instructions for Visual Instruction Tuning

JiuZhang3.0: Efficiently Improving Mathematical Reasoning by Training Small Data Synthesis Models

Exploring Context Window of Large Language Models via Decomposed Positional Vectors

Images are Achilles' Heel of Alignment: Exploiting Visual Vulnerabilities for Jailbreaking Multimodal Large Language Models

Small Agent Can Also Rock! Empowering Small Language Models as Hallucination Detector

REAR: A Relevance-Aware Retrieval-Augmented Framework for Open-domain Question Answering

Not Everything is All You Need: Toward Low-Redundant Optimization for Large Language Model Alignment

BASES: Large-scale Web Search User Simulation with Large Language Model based Agents

Unveiling the Flaws: Exploring Imperfections in Synthetic Data and Mitigation Strategies for Large Language Models

AuriSRec: Adversarial User Intention Learning in Sequential Recommendation

Scaling Law of Large Sequential Recommendation Models

Promoting Two-sided Fairness with Adaptive Weights for Providers and Customers in Recommendation

Rotative Factorization Machines

Revisiting Reciprocal Recommender Systems: Metrics, Formulation, and Method

The dawn after the dark: An empirical study on factuality hallucination in large language models

Language-specific neurons: The key to multilingual capabilities in large language models

Unlocking Data-free Low-bit Quantization with Matrix Decomposition for KV Cache Compression

Data-CUBE: Data Curriculum for Instruction-based Sentence Representation Learning

Improving large language models via fine-grained reinforcement learning with minimum editing constraint

LLMBox: A Comprehensive Library for Large Language Models

Sequence-level Semantic Representation Fusion for Recommender Systems

EulerFormer: Sequential User Behavior Modeling With Complex Vector Attention

Privacy-Preserving Cross-Domain Recommendation With Federated Graph Learning

Adapting Large Language Models By Integrating Collaborative Semantics For Recommendation

Not All Metrics Are Guilty: Improving NLG Evaluation By Diversifying References

Publication: Xiaoxue Cheng (成晓雪)'s paper accepted to LREC-COLING 2024

ChainLM: Empowering Large Language Models with Improved Chain-of-Thought Prompting

Publication: Zican Dong (董梓灿)'s paper accepted to LREC-COLING 2024

BAMBOO: A Comprehensive Benchmark for Evaluating Long Text Modeling Capacities of Large Language Models

Publication: Peiyu Liu (刘沛羽)'s paper accepted to LREC-COLING 2024

Enhancing Parameter-efficient Fine-tuning with Simple Calibration based on Stable Rank

Publication: Peiyu Liu (刘沛羽)'s paper accepted to LREC-COLING 2024

Do Emergent Abilities Exist in Quantized Large Language Models: An Empirical Study

AgentCF: Collaborative Learning with Autonomous Language Agents for Recommender Systems

Dense Text Retrieval based on Pretrained Language Models: A Survey

Evaluating Object Hallucination In Large Vision-Language Models

HaluEval: A Large-Scale Hallucination Evaluation Benchmark For Large Language Models

Rethinking The Evaluation For Conversational Recommendation In The Era Of Large Language Models

StructGPT: A General Framework For Large Language Model To Reason Over Structured Data

ReasoningLM: Enabling Structural Subgraph Reasoning in Pre-trained Language Models for Question Answering over Knowledge Graph

Enhancing Scalability Of Pre-Trained Language Models Via Efficient Parameter Sharing

A Thorough Examination On Zero-Shot Dense Retrieval

ChatCoT: Tool-Augmented Chain-Of-Thought Reasoning On Chat-Based Large Language Models

Evaluating And Improving Tool-Augmented Computation-Intensive Math Reasoning

Publication: Kun Zhou (周昆)'s paper accepted to ECML-PKDD 2023

MASTER: Multi-Task Pre-Trained Bottlenecked Masked Autoencoders Are Better Dense Retrievers

Reciprocal Sequential Recommendation

Generative Next-Basket Recommendation

JiuZhang 2.0: A Unified Chinese Pre-trained Language Model for Multi-task Mathematical Problem Solving

Improving Conversational Recommendation Systems via Counterfactual Data Simulation

Small Pre-trained Language Models Can be Fine-tuned as Large Models via Over-Parameterization

TOME: A Two-stage Approach for Model-based Retrieval

Zero-shot Visual Question Answering with Language Model Feedback

The Web Can Be Your Oyster for Improving Language Models

MVP: Multi-task Supervised Pre-training for Natural Language Generation

Learning to Imagine: Visually-Augmented Natural Language Generation

Diffusion Models for Non-autoregressive Text Generation: A Survey

EulerNet: Adaptive Feature Interaction Learning via Euler's Formula for CTR Prediction

Towards a More User-Friendly and Easy-to-Use Benchmark Library for Recommender Systems

Learning Vector-Quantized Item Representation for Transferable Sequential Recommenders

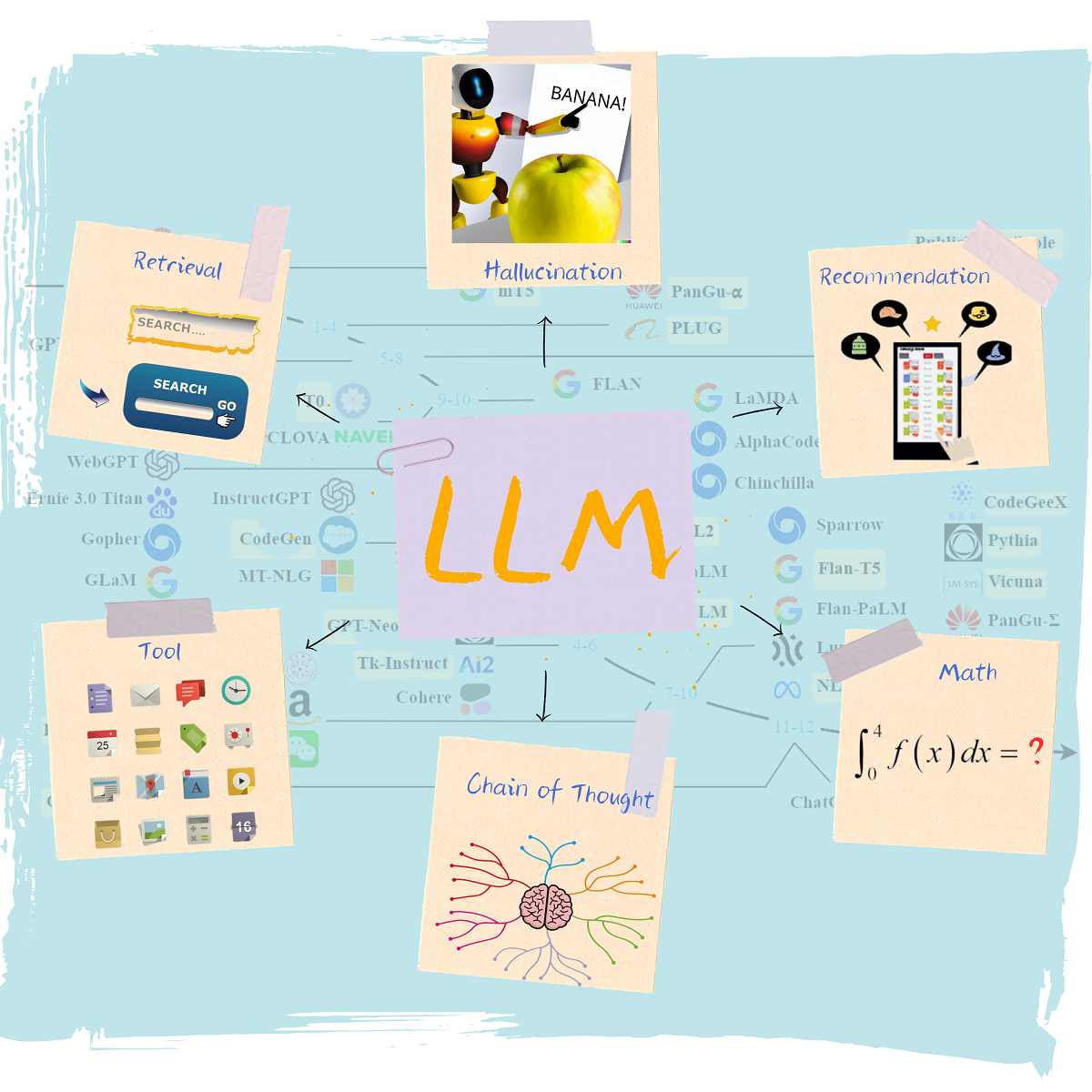

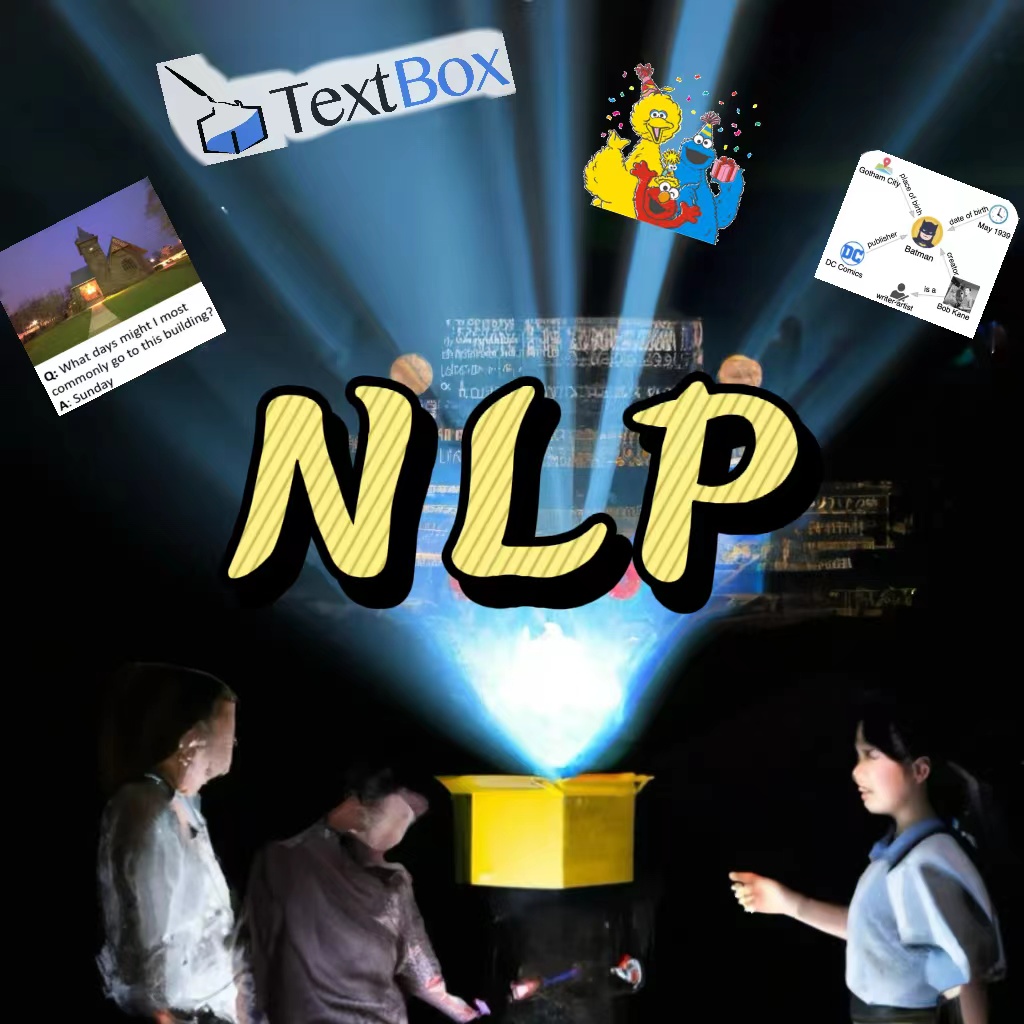

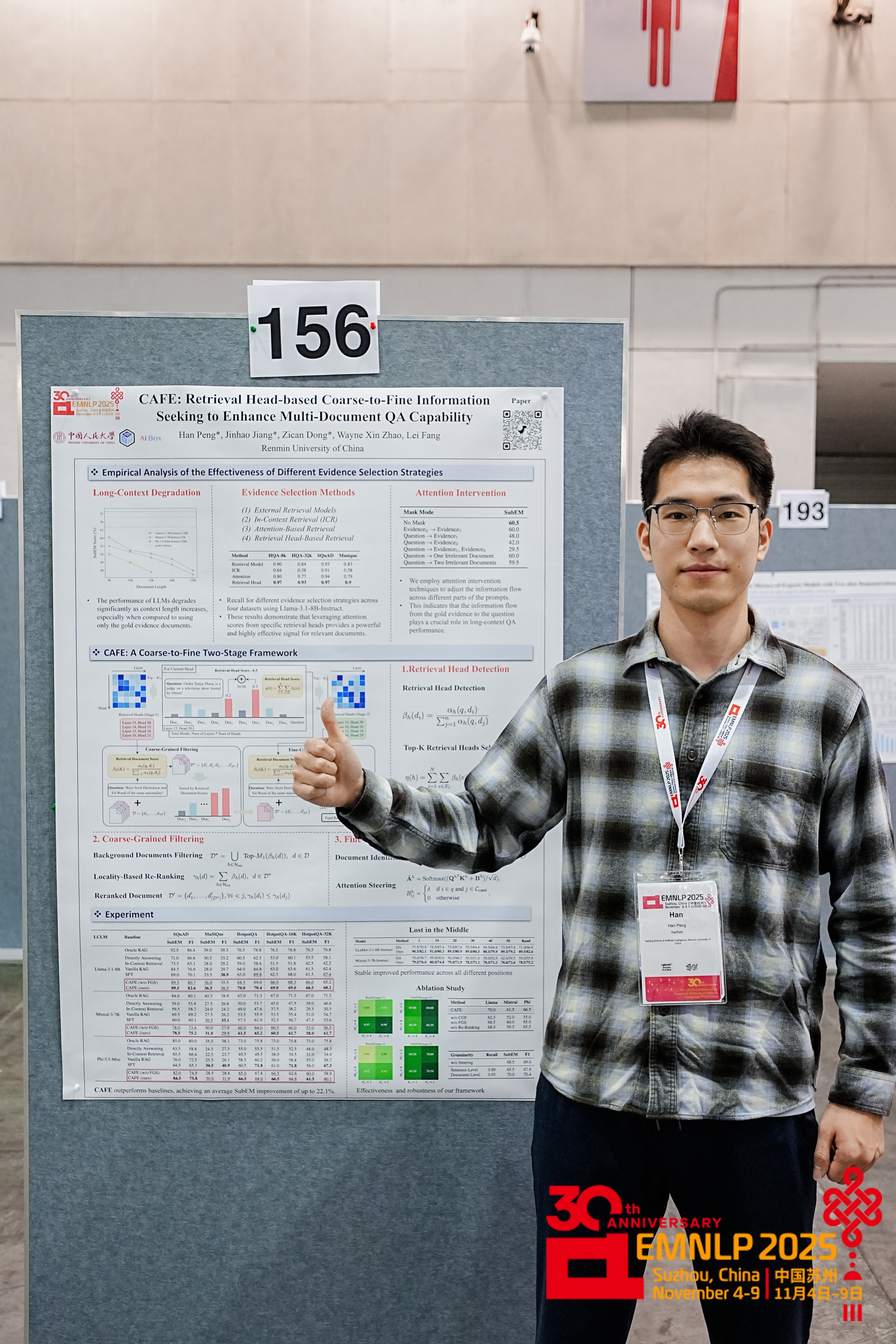

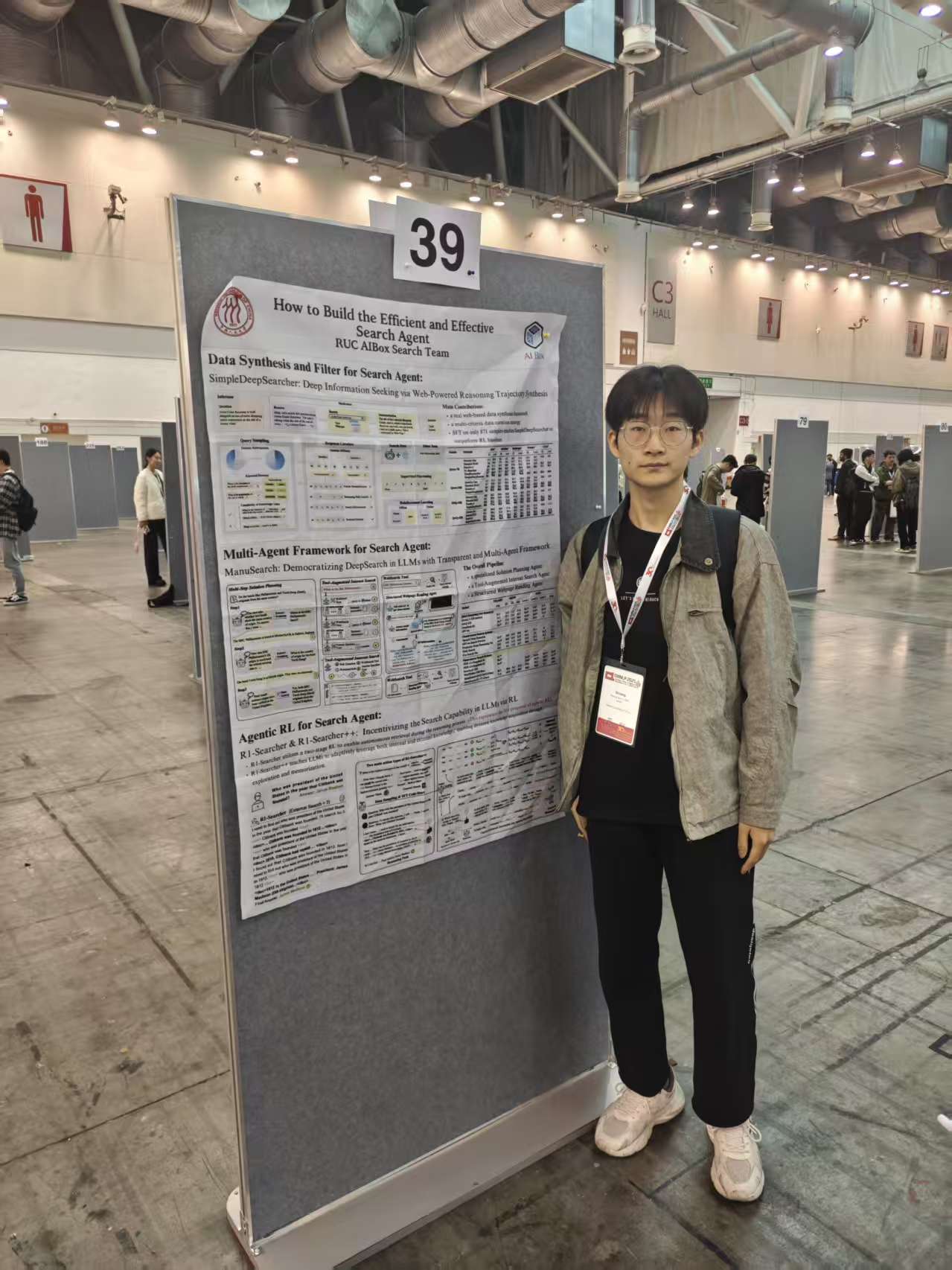

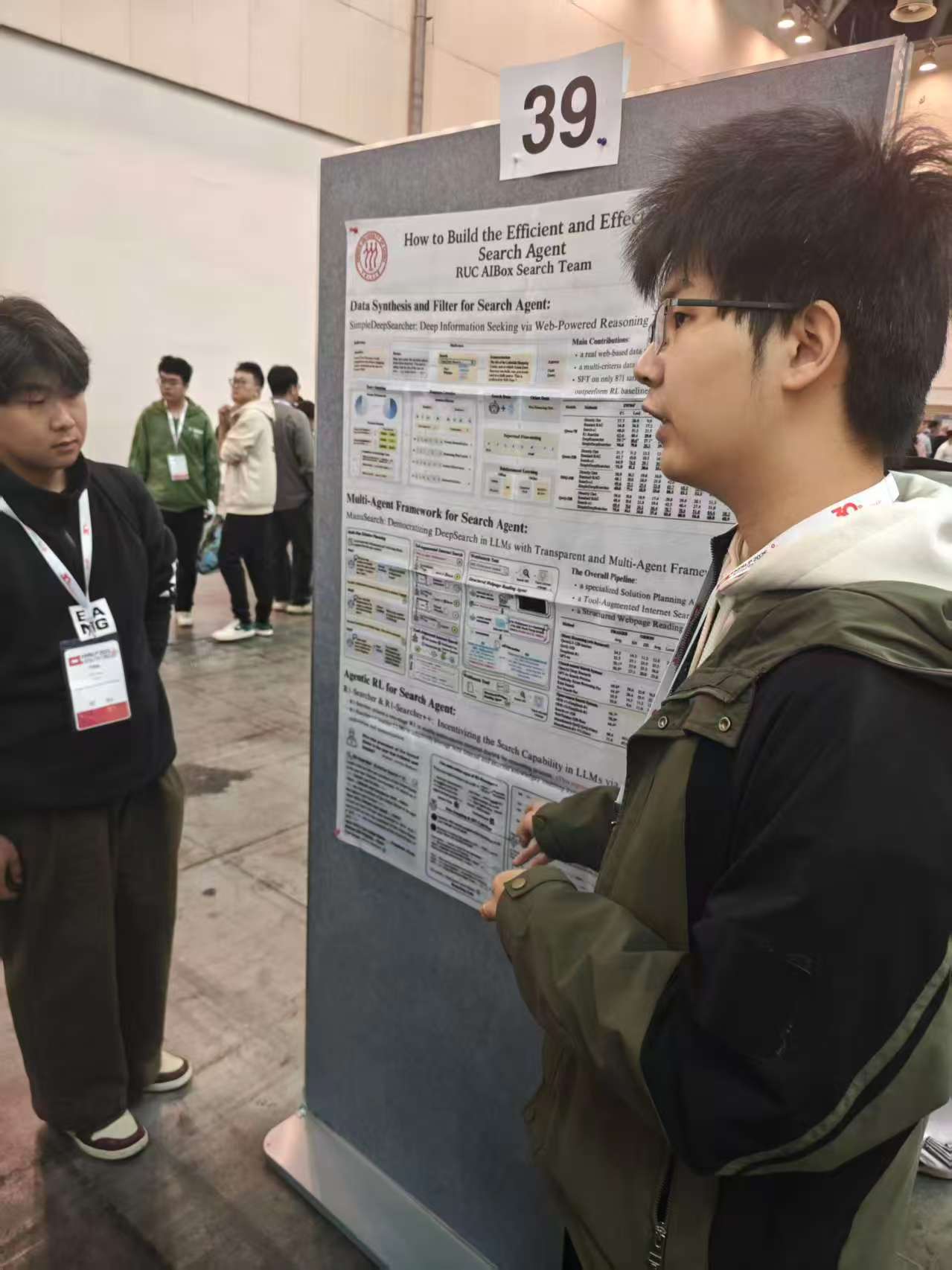

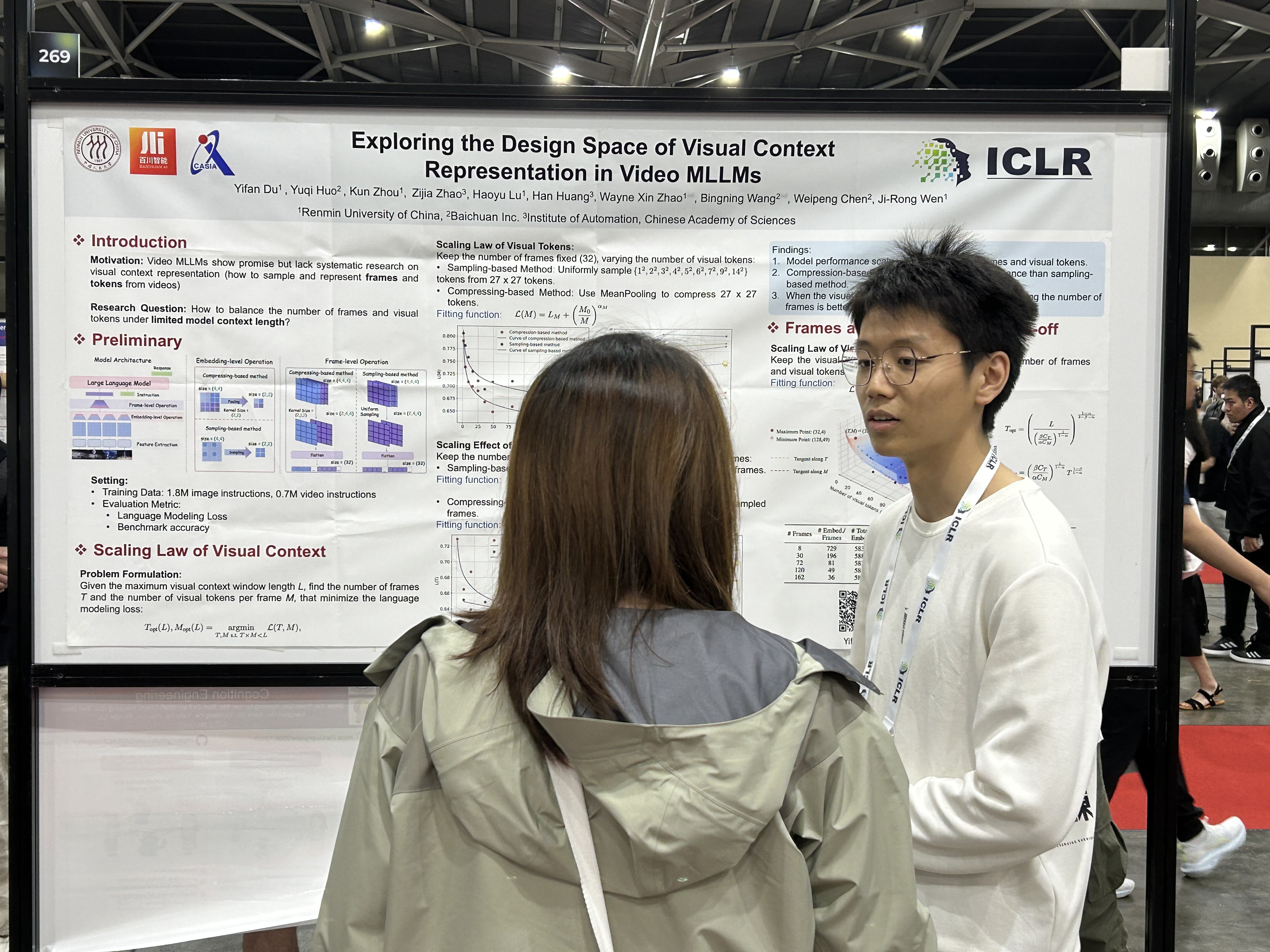

Latest Photos

AI Box Impression

Students interested in our group are welcome to contact us at batmanfly AT qq.com (Prof. Zhao) / rucaibox AT 163.com (group mailbox).